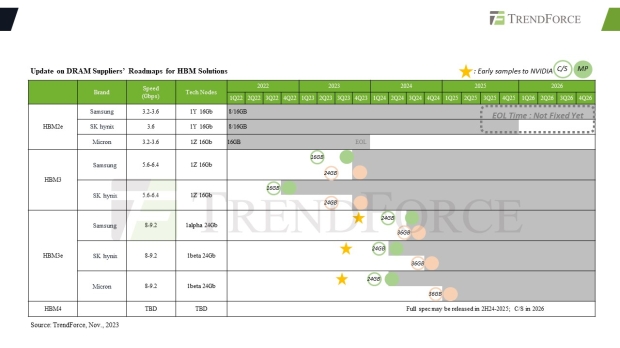

SK hynix has officially announced plans to launch its fifth-generation High Bandwidth Memory (HBM), otherwise known as HBM3e, this year, with next-gen HBM4 memory to follow in 2026.

Kim Chun-hwan, vice president of SK hynix, announced the plans during his keynote speech at SEMICON Korea 2024, held at COEX in Seoul's Samseong neighborhood on January 31. VP Kim said: "With the advent of the AI computing era, generative AI is rapidly advancing" and that the "generative AI market is expected to grow at an annual rate of 35%".

We can expect SK hynix's new HBM4 memory to arrive with a 2048-bit memory interface, double the 1024-bit width of HBM3e, and a huge 1.5TB/sec of memory bandwidth per stack. The next-gen AI GPUs from the likes of NVIDIA and AMD will rely on ultra-fast memory like HBM4. SK hynix is targeting 2026 for HBM4, but we could see it a bit earlier, in late 2025.
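The per-stack figure comes down to simple arithmetic: peak bandwidth is the interface width in bits times the per-pin data rate, divided by eight bits per byte. SK hynix hasn't given HBM4's per-pin speed here, so the Python sketch below works backwards from the quoted 2048-bit width and 1.5TB/sec target. The function names are hypothetical, and the sketch assumes decimal units (1TB = 1,000GB) and ignores protocol overhead.

```python
# Hypothetical helpers: peak HBM stack bandwidth from interface width and per-pin data rate.
# Assumes decimal units (1 TB/s = 1,000 GB/s) and ignores refresh/protocol overhead.

BITS_PER_BYTE = 8


def stack_bandwidth_tbps(interface_bits: int, pin_rate_gbps: float) -> float:
    """Peak per-stack bandwidth in TB/s for a bus width and a per-pin rate in Gbit/s."""
    gigabytes_per_sec = interface_bits * pin_rate_gbps / BITS_PER_BYTE
    return gigabytes_per_sec / 1000


def required_pin_rate_gbps(interface_bits: int, target_tbps: float) -> float:
    """Per-pin rate (Gbit/s) needed to reach a target per-stack bandwidth in TB/s."""
    return target_tbps * 1000 * BITS_PER_BYTE / interface_bits


if __name__ == "__main__":
    # HBM4 as described above: 2048-bit interface, ~1.5 TB/s per stack.
    hbm4_rate = required_pin_rate_gbps(2048, 1.5)
    # The same 1.5 TB/s pushed through a 1024-bit interface like HBM3e's.
    narrow_rate = required_pin_rate_gbps(1024, 1.5)
    print(f"2048-bit: {hbm4_rate:.2f} Gbps per pin")  # prints 5.86
    print(f"1024-bit: {narrow_rate:.2f} Gbps per pin")  # prints 11.72
    print(f"check: {stack_bandwidth_tbps(2048, hbm4_rate):.2f} TB/s")  # prints 1.50
```

Doubling the interface width is what lets HBM4 hit that number at roughly half the per-pin speed a 1024-bit bus would need.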

DRAM supplier roadmaps (source: TrendForce)

Right now, we've got HBM3e memory stacks packing up to 1.2TB/sec of memory bandwidth each, so six stacks add up to a huge 7.2TB/sec. NVIDIA's upcoming H200 AI GPU will have up to 4.8TB/sec of memory bandwidth. With the interface doubling to 2048 bits, we should expect HBM4 memory to push past 1.5TB/sec per stack... and we can't wait.
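Total GPU memory bandwidth scales linearly with the number of stacks, which is where the 7.2TB/sec figure comes from. The Python sketch below is a hypothetical helper, not vendor tooling. It also derives the per-pin rate that 1.2TB/sec implies on HBM3e's 1024-bit interface (only the bandwidth is quoted above), and the six-stack HBM4 line is purely an illustrative what-if built on the ~1.5TB/sec estimate.

```python
# Hypothetical helpers: aggregate bandwidth across HBM stacks and the implied per-pin rate.
# Peak values in decimal TB/s; real GPUs may run their stacks below peak speed.


def total_bandwidth_tbps(per_stack_tbps: float, stacks: int) -> float:
    """Aggregate peak bandwidth across identical HBM stacks."""
    return per_stack_tbps * stacks


def pin_rate_gbps(per_stack_tbps: float, interface_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a per-stack bandwidth and bus width."""
    return per_stack_tbps * 1000 * 8 / interface_bits


if __name__ == "__main__":
    print(f"6 x HBM3e: {total_bandwidth_tbps(1.2, 6):.1f} TB/s")  # prints 7.2
    print(f"HBM3e pin rate: {pin_rate_gbps(1.2, 1024):.3f} Gbps")  # prints 9.375
    # What-if only: six HBM4 stacks at the ~1.5 TB/s per-stack estimate.
    print(f"6 x HBM4 (what-if): {total_bandwidth_tbps(1.5, 6):.1f} TB/s")  # prints 9.0
```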

SK hynix isn't the only company developing HBM4 memory: Samsung and Micron are both working on it too. Jaejune Kim, Executive Vice President of Memory at Samsung, said during the company's latest earnings call with analysts and investors: "HBM4 is in development with a 2025 sampling and 2026 mass production timeline. Demand for also customized HBM is growing, driven by generative AI and so we're also developing not only a standard product, but also a customized HBM optimized performance-wise for each customer by adding logic chips. Detailed specifications are being discussed with key customers".