AMD's new Instinct MI300X AI GPUs have landed in their first deployment, with LaminiAI receiving the first bulk order of the accelerators late last week.

LaminiAI posted that the "next batch of LaminiAI LLM Pods" will feature AMD's latest CDNA 3-based AI accelerator, the new Instinct MI300X. In her post on social media, LaminiAI CEO and co-founder Sharon Zhou said: "The first AMD MI300X live in production. Like freshly baked bread, 8x MI300X is online. If you are building on open LLMs and you are blocked on compute, let me know. Everyone should have access to this wizard technology called LLMs. That is to say, the next batch of LaminiAI LLM pods are here".

LaminiAI will use its AMD Instinct MI300X AI GPUs to run large language models (LLMs) for enterprises. The AI company partners with AMD for AI hardware, so it makes sense that it would get priority access to new AI accelerators like the Instinct MI300X.

This appears to be the first volume shipment of the Instinct MI300X from AMD, which is (another) major milestone for the company and gives it stronger AI GPU technology to compete with NVIDIA and its H100 AI GPU.

It seems LaminiAI has multiple Instinct MI300X-based AI machines, with 8 x Instinct MI300X accelerators per system... that would be beautiful to see (and test). Zhou posted a screenshot of an 8-way AMD Instinct MI300X AI accelerator setup in a single LLM Pod. You can see that each Instinct MI300X AI GPU is drawing around 180W, which suggests the cards are probably sitting idle... power consumption numbers will skyrocket once an 8-way AI GPU pod is under load.

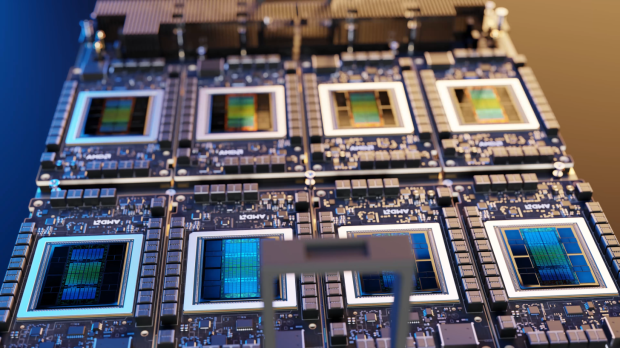

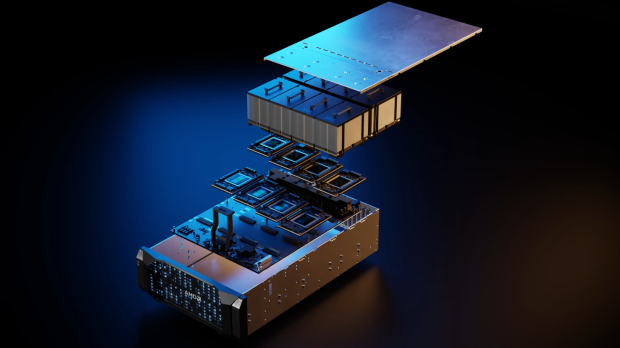

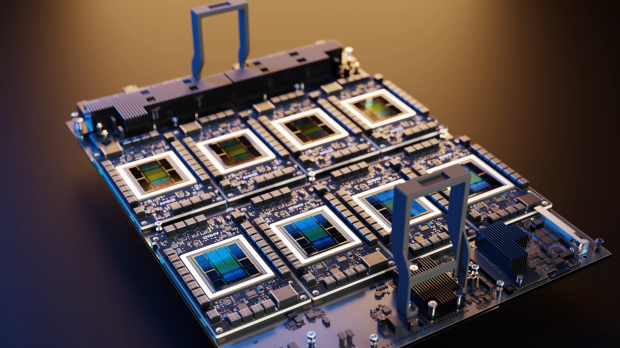

AMD's new Instinct MI300X is a technological marvel built with chiplets and advanced packaging technologies from TSMC. It uses the new CDNA 3 architecture, which blends 5nm and 6nm IPs for up to an insane 153 billion transistors on the Instinct MI300X.

Inside, the main interposer is laid out with a passive die that houses the interconnect layer using a 4th Gen Infinity Fabric solution. The interposer carries a total of 28 dies: 8 x HBM3 packages, 16 dummy dies between the HBM packages, and 4 active dies, each of which features 2 compute dies.

Each CDNA 3-based GCD (Graphics Compute Die) features a total of 40 compute units, which works out to 2,560 stream processors. There are 8 compute dies (GCDs) in total, for a grand total of 320 compute units and 20,480 stream processors. AMD wants the best yields possible (of course), so it is dialing things down a bit: 304 compute units are enabled (38 CUs per GPU chiplet), which makes for a total of 19,456 stream processors.
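The compute unit math above can be checked with a quick sketch. The figures come straight from the article; the 64 stream processors per CU is derived from the quoted 2,560 / 40, and the `totals` helper is a hypothetical name used only for illustration.

```python
# Figures quoted in the article.
CUS_PER_GCD_FULL = 40      # compute units physically present per compute die
CUS_PER_GCD_ENABLED = 38   # compute units enabled per compute die for yields
GCD_COUNT = 8              # compute dies per MI300X

# Derived (assumption): 2,560 stream processors / 40 CUs = 64 per CU.
SP_PER_CU = 2560 // CUS_PER_GCD_FULL


def totals(cus_per_gcd, gcds=GCD_COUNT, sp_per_cu=SP_PER_CU):
    """Return (total compute units, total stream processors)."""
    cus = cus_per_gcd * gcds
    return cus, cus * sp_per_cu


full = totals(CUS_PER_GCD_FULL)
enabled = totals(CUS_PER_GCD_ENABLED)

print("full die:", full)
print("enabled: ", enabled)
print(f"share of CUs enabled: {enabled[0] / full[0]:.0%}")
```

In other words, the shipping configuration keeps 95% of the compute units that are physically on the package.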

AMD's new Instinct MI300X features a huge 50% increase in HBM3 memory over its predecessor, the Instinct MI250X (192GB now versus 128GB). AMD is talking 8 x HBM3 stacks; each stack is 12-Hi and uses 16Gb ICs with 2GB of capacity per IC, for 24GB per stack. 8 x 24 = 192GB of HBM3 memory.
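The memory capacity works out as follows, as a minimal sketch using only the numbers quoted above (the variable names are illustrative, not anything from AMD):

```python
# Figures quoted in the article.
GBIT_PER_IC = 16      # each HBM3 IC is 16 gigabits
ICS_PER_STACK = 12    # 12-Hi stack
STACKS = 8            # HBM3 stacks per MI300X
MI250X_GB = 128       # predecessor capacity in GB

gb_per_ic = GBIT_PER_IC // 8               # 8 bits per byte -> 2 GB per IC
gb_per_stack = gb_per_ic * ICS_PER_STACK   # capacity of one 12-Hi stack
total_gb = gb_per_stack * STACKS           # capacity across all stacks
increase = (total_gb - MI250X_GB) / MI250X_GB

print(f"{gb_per_ic} GB per IC, {gb_per_stack} GB per stack, {total_gb} GB total")
print(f"increase over MI250X: {increase:.0%}")
```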

AMD's new Instinct MI300X, with its 192GB of HBM3 memory, will enjoy a huge 5.3TB/sec of memory bandwidth and 896GB/sec of Infinity Fabric bandwidth.

In comparison, NVIDIA's upcoming H200 AI GPU has 141GB of HBM3e memory with up to 4.8TB/sec of memory bandwidth, while the H100 has only 80GB of HBM3 with up to 3.35TB/sec of memory bandwidth, so AMD's new Instinct MI300X is sitting very pretty on memory against both.
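To put those memory numbers side by side, here is a rough sketch that computes the MI300X's advantage as simple ratios. It uses only the capacities and peak bandwidths quoted above and says nothing about real-world performance; the `specs` table and `ratio_vs` helper are hypothetical names for illustration.

```python
# (capacity in GB, peak memory bandwidth in TB/sec), as quoted in the article.
specs = {
    "MI300X": (192, 5.3),
    "H200": (141, 4.8),
    "H100": (80, 3.35),
}


def ratio_vs(other, base="MI300X"):
    """Return (capacity ratio, bandwidth ratio) of `base` over `other`."""
    base_cap, base_bw = specs[base]
    cap, bw = specs[other]
    return base_cap / cap, base_bw / bw


for gpu in ("H200", "H100"):
    cap_ratio, bw_ratio = ratio_vs(gpu)
    print(f"MI300X vs {gpu}: {cap_ratio:.2f}x capacity, {bw_ratio:.2f}x bandwidth")
```

On paper that is roughly 2.4x the memory capacity of the H100, with a much narrower lead over the H200.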