The HBM market is one of the most important markets in the world right now. It is driven by the meteoric rise of AI and the vast numbers of AI GPUs that rely on HBM memory, including HBM3, HBM3e, and soon the next-gen HBM4 standard.

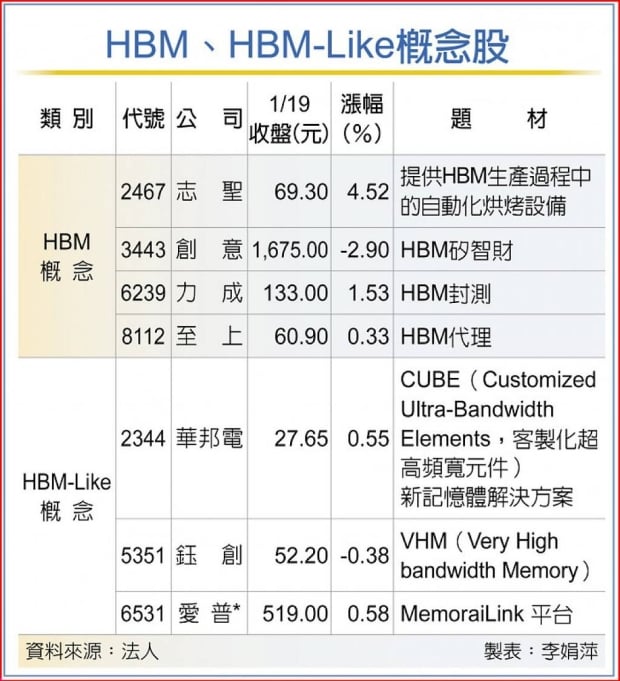

HBM and HBM-like technologies (source: Ctee)

Because of the dominance of AI GPUs, HBM revenue is expected to nearly double by 2025 as more and more next-gen AI GPUs are released. These include AMD's just-announced Instinct MI300X, NVIDIA's beefed-up Hopper H200, and NVIDIA's next-gen Blackwell B100 coming out this year, all of which are powered by HBM.

Market research firm Gartner forecasts that the HBM market will rocket up to $4.976 billion in revenue by 2025, close to double its 2023 revenue. These figures are based purely on current and anticipated industry demand, and things can change very quickly. Just look at AI GPU demand right now: it's insatiable, and the big driver behind AI GPUs getting faster and faster is high-speed HBM memory.

HBM3e is all but here on upcoming AI GPUs, while next-gen HBM4 is already in the works and expected on next-gen AI GPUs in the near future. NVIDIA will use HBM3e memory on its Hopper H200 and Blackwell B100 AI GPUs, while AMD's new Instinct MI300X uses HBM3.

SK hynix, Samsung, and Micron are the big three when it comes to HBM memory, and all are currently cooking up HBM3e and HBM4 memory for AI GPUs. HBM chip packaging, IP, and HBM-like alternatives, including Winbond's CUBE, Etron's VHM (Very High Bandwidth Memory), and AP Memory's MemoraiLink, are also in the mix.

According to Ctee, the Taiwanese companies set to benefit fall into several groups: HBM silicon intellectual property (IP) providers, suppliers of the automatic baking equipment used in the HBM production process, HBM packaging and testing providers such as Powertech Technology (Licheng), and HBM distributors.

Global Unichip Corp. (GUC) has reportedly been working with TSMC and SK hynix on an HBM3 CoWoS platform. Its GLink-2.5D die-to-die interface and other silicon IP are used in HBM3 designs, making it a big beneficiary of the AI GPU boom.

Powertech (Licheng) says it is optimistic about continued demand for HBM packaging and testing. It ordered equipment in Q3 2023 ahead of starting operations in March 2024, and mass production is expected by the end of 2024, pending customer certification.